Projection and idempotent matrix (BR Chapter 6)

Highlights: In this lecture, we show any idempotent matrix $\mathbf{P}$ is a projector into $\mathcal{C}(\mathbf{P})$ along $\mathcal{C}(\mathbf{I} - \mathbf{P})$.

Note that when many authors say projection or projector, they actually mean orthogonal projection or orthogonal projector, which is the subject of the next lecture. In this course, we distinguish between projection and orthogonal projection.

Direct sum

BR Theorem 6.1. Dimension of a sum of subspaces. Let $\mathcal{S}_1$ and $\mathcal{S}_2$ be two subspaces in $\mathbb{R}^n$. Then $$ \text{dim}(\mathcal{S}_1 + \mathcal{S}_2) = \text{dim}(\mathcal{S}_1) + \text{dim}(\mathcal{S}_2) - \text{dim}(\mathcal{S}_1 \cap \mathcal{S}_2). $$

Proof: Read BR p156-157.
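A quick numerical check of the dimension formula may help. The sketch below is an illustration, not taken from BR: the matrices `A` and `B` are hypothetical examples whose columns span $\mathcal{S}_1$ and $\mathcal{S}_2$ in $\mathbb{R}^4$. It assumes both have full column rank, so that the null space of $(\mathbf{A} : -\mathbf{B})$ has the same dimension as $\mathcal{S}_1 \cap \mathcal{S}_2$.

```julia
using LinearAlgebra

# Hypothetical example in R^4: S1 = span{e1, e2}, S2 = span{e2, e3}
A = [1.0 0.0; 0.0 1.0; 0.0 0.0; 0.0 0.0]  # columns span S1
B = [0.0 0.0; 1.0 0.0; 0.0 1.0; 0.0 0.0]  # columns span S2

dim_S1 = rank(A)
dim_S2 = rank(B)

# S1 + S2 is the column space of the concatenated matrix (A : B)
dim_sum = rank(hcat(A, B))

# A*u = B*v exactly when (u, v) lies in the null space of (A : -B);
# with A and B of full column rank, that null space has dimension dim(S1 ∩ S2)
dim_int = size(nullspace(hcat(A, -B)), 2)

# Both sides of the formula in BR Theorem 6.1
(dim_sum, dim_S1 + dim_S2 - dim_int)
```

Here the two subspaces share the line spanned by $\mathbf{e}_2$, so the intersection term is what keeps the right-hand side from overcounting.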

BR Corollary 6.1.

- Subadditivity of dim function. Let $\mathcal{S}_1$ and $\mathcal{S}_2$ be two subspaces in $\mathbb{R}^n$. Then $$ \text{dim}(\mathcal{S}_1 + \mathcal{S}_2) \le \text{dim}(\mathcal{S}_1) + \text{dim}(\mathcal{S}_2). $$

- Subadditivity of rank function. Let $\mathbf{A}$ and $\mathbf{B}$ be two matrices of the same order. Then $$ \text{rank}(\mathbf{A} + \mathbf{B}) \le \text{rank}(\mathbf{A}) + \text{rank}(\mathbf{B}). $$

Proof: The first claim follows immediately from BR Theorem 6.1. For the second claim, first note $\mathcal{C}(\mathbf{A} + \mathbf{B}) \subseteq \mathcal{C}(\mathbf{A}) + \mathcal{C}(\mathbf{B})$ (why?). Then \begin{eqnarray*} & & \text{rank}(\mathbf{A} + \mathbf{B}) \\ &=& \text{dim}(\mathcal{C}(\mathbf{A} + \mathbf{B})) \quad \text{(definition of rank)} \\ &\le& \text{dim}(\mathcal{C}(\mathbf{A}) + \mathcal{C}(\mathbf{B})) \quad \text{(monotonicity of dim)} \\ &\le& \text{dim}(\mathcal{C}(\mathbf{A})) + \text{dim}(\mathcal{C}(\mathbf{B})) \quad \text{(subadditivity of dim)} \\ &=& \text{rank}(\mathbf{A}) + \text{rank}(\mathbf{B}). \quad \text{(definition of rank)} \end{eqnarray*} The second inequality follows from the first claim.
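To see that the rank bound can hold with equality or strictly, here is a small sketch with hypothetical $2 \times 2$ matrices (the names `A` and `B` are chosen only for illustration).

```julia
using LinearAlgebra

A = [1.0 0.0; 0.0 0.0]   # rank 1
B = [0.0 0.0; 0.0 1.0]   # rank 1

# Equality: the column spaces of A and B intersect only at 0
lhs_equal = rank(A + B)
rhs_equal = rank(A) + rank(B)

# Strict inequality: A and -A share the same column space, and their sum is O
lhs_strict = rank(A + (-A))
rhs_strict = rank(A) + rank(-A)

(lhs_equal, rhs_equal), (lhs_strict, rhs_strict)
```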

Two subspaces $\mathcal{S}_1$ and $\mathcal{S}_2$ in a vector space $\mathcal{V}$ are said to be complementary whenever $$ \mathcal{V} = \mathcal{S}_1 + \mathcal{S}_2 \text{ and } \mathcal{S}_1 \cap \mathcal{S}_2 = \{\mathbf{0}\}. $$ In such cases, we say $\mathcal{V}$ is a direct sum of $\mathcal{S}_1$ and $\mathcal{S}_2$ and denote $\mathcal{V} = \mathcal{S}_1 \oplus \mathcal{S}_2$.

TODO: visualize. $\mathbb{R}^3 = \text{a plane} \oplus \text{a line}$.
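As a numerical stand-in for the picture, the following sketch decomposes a vector of $\mathbb{R}^3$ into a plane component and a line component. The particular plane (the $xy$-plane), line (spanned by $\mathbf{1}_3$), and vector `x` are assumptions made for illustration.

```julia
using LinearAlgebra

B1 = [1.0 0.0; 0.0 1.0; 0.0 0.0]  # columns span the plane S1 (the xy-plane)
b2 = [1.0, 1.0, 1.0]              # spans the line S2
M = hcat(B1, b2)                  # columns form a basis of R^3

# The sum is direct: dimensions add up to 3 = 2 + 1
is_direct = rank(M) == rank(B1) + 1

x = [2.0, 3.0, 4.0]
c = M \ x                         # unique coordinates of x in the combined basis
x1 = B1 * c[1:2]                  # component in the plane
x2 = c[3] * b2                    # component on the line
x1, x2
```

Because `M` is invertible, the coordinates `c` are unique, so no other choice of `x1` in the plane and `x2` on the line adds up to `x`.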

BR Theorem 6.2. Let $\mathcal{S}_1, \mathcal{S}_2$ be two subspaces of the same order and $\mathcal{V} = \mathcal{S}_1 + \mathcal{S}_2$. The following statements are equivalent:

- $\mathcal{V} = \mathcal{S}_1 \oplus \mathcal{S}_2$.

- $\text{dim}(\mathcal{V}) = \text{dim}(\mathcal{S}_1) + \text{dim}(\mathcal{S}_2)$.

- Any vector $\mathbf{x} \in \mathcal{V}$ can be uniquely represented as $$ \mathbf{x} = \mathbf{x}_1 + \mathbf{x}_2, \text{ where } \mathbf{x}_1 \in \mathcal{S}_1, \mathbf{x}_2 \in \mathcal{S}_2. $$ We will refer to this as the unique representation or unique decomposition property of direct sums.

Proof: We show that $1 \Rightarrow 2 \Rightarrow 3 \Rightarrow 1$.

$1 \Rightarrow 2$: By definition of direct sum, we know $\mathcal{S}_1 \cap \mathcal{S}_2 = \{\mathbf{0}\}$. Thus by BR Theorem 6.1, $\text{dim}(\mathcal{S}_1 + \mathcal{S}_2) = \text{dim}(\mathcal{S}_1) + \text{dim}(\mathcal{S}_2)$.

$2 \Rightarrow 3$: By 2 and BR Theorem 6.1, we know $\text{dim}(\mathcal{S}_1 \cap \mathcal{S}_2) = 0$ so $\mathcal{S}_1 \cap \mathcal{S}_2 = \{\mathbf{0}\}$. Let $\mathbf{x} \in \mathcal{S}_1 + \mathcal{S}_2$ and assume $\mathbf{x}$ can be decomposed in two ways: $\mathbf{x} = \mathbf{u}_1 + \mathbf{u}_2 = \mathbf{v}_1 + \mathbf{v}_2$, where $\mathbf{u}_1, \mathbf{v}_1 \in \mathcal{S}_1$ and $\mathbf{u}_2, \mathbf{v}_2 \in \mathcal{S}_2$. Then $\mathbf{u}_1 - \mathbf{v}_1 = -(\mathbf{u}_2 - \mathbf{v}_2)$, indicating that the vectors $\mathbf{u}_1 - \mathbf{v}_1$ and $\mathbf{u}_2 - \mathbf{v}_2$ belong to both $\mathcal{S}_1$ and $\mathcal{S}_2$ and thus must be $\mathbf{0}$. Therefore $\mathbf{u}_1 = \mathbf{v}_1$ and $\mathbf{u}_2 = \mathbf{v}_2$.

$3 \Rightarrow 1$: We only need to show $\mathcal{S}_1 \cap \mathcal{S}_2 = \{\mathbf{0}\}$. Let $\mathbf{x} \in \mathcal{S}_1 \cap \mathcal{S}_2$. Decompose $\mathbf{x}$ in two ways: $\mathbf{x} = \mathbf{x} + \mathbf{0} = \mathbf{0} + \mathbf{x}$. Then by the uniqueness part of 3, $\mathbf{x}=\mathbf{0}$. So the only possible element in $\mathcal{S}_1 \cap \mathcal{S}_2$ is $\mathbf{0}$.

Equivalence of properties 1, 2, 3 means we can take any of them to generalize the definition of direct sum to more than two subspaces.

BR Theorem 6.3. Let $\mathcal{S}_1, \ldots, \mathcal{S}_k$ be subspaces of the same order. The following statements are equivalent:

- $(\mathcal{S}_1 + \cdots + \mathcal{S}_i) \cap \mathcal{S}_{i+1} = \{\mathbf{0}\}$ for $i=1,\ldots,k-1$.

- $\text{dim}(\mathcal{S}_1 + \cdots + \mathcal{S}_k) = \text{dim}(\mathcal{S}_1) + \cdots + \text{dim}(\mathcal{S}_k)$.

- Any vector $\mathbf{x} \in \mathcal{S}_1 + \cdots + \mathcal{S}_k$ can be uniquely expressed as $$ \mathbf{x} = \mathbf{x}_1 + \cdots + \mathbf{x}_k, \text{ where } \mathbf{x}_i \in \mathcal{S}_i, i=1,\ldots,k. $$

Proof: BR p162-163.
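The first statement of BR Theorem 6.3 asks for more than pairwise trivial intersections. A minimal sketch, using three hypothetical lines in $\mathbb{R}^2$ chosen for illustration, shows why:

```julia
using LinearAlgebra

# Three distinct lines through the origin in R^2
s1 = [1.0, 0.0]
s2 = [0.0, 1.0]
s3 = [1.0, 1.0]

# Any two of the spanning vectors are linearly independent,
# so each pair of lines intersects only at 0
pairwise = [rank(hcat(a, b)) for (a, b) in ((s1, s2), (s1, s3), (s2, s3))]

# Yet dim(S1 + S2 + S3) = 2 < 1 + 1 + 1, so the second statement fails
dim_sum = rank(hcat(s1, s2, s3))

# The first statement fails at i = 2: adding S3 does not enlarge S1 + S2,
# which means S3 is contained in S1 + S2
s3_inside = rank(hcat(s1, s2, s3)) == rank(hcat(s1, s2))
```

The vector `s3` also has two different representations, `s3 + 0 + 0`-style and `s1 + s2`, so the unique representation property fails as well.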

Projection

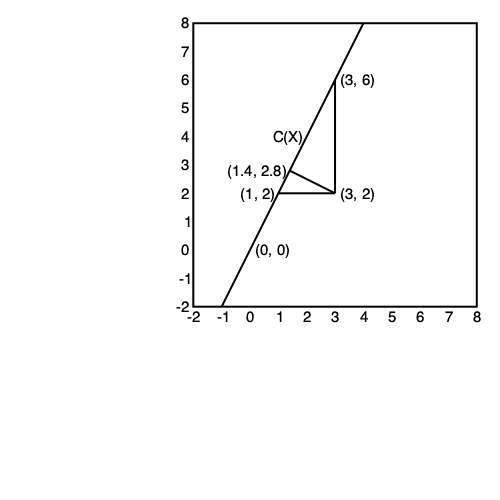

BR Definition 6.3. Let $\mathbb{R}^n = \mathcal{S} \oplus \mathcal{T}$ and $\mathbf{y} = \mathbf{x} + \mathbf{z}$ be the unique representation of $\mathbf{y} \in \mathbb{R}^n$ with $\mathbf{x} \in \mathcal{S}$ and $\mathbf{z} \in \mathcal{T}$. Then

- the vector $\mathbf{x}$ is called the projection of $\mathbf{y}$ into $\mathcal{S}$ along $\mathcal{T}$;

- the vector $\mathbf{z}$ is called the projection of $\mathbf{y}$ into $\mathcal{T}$ along $\mathcal{S}$.

Let $\mathbb{R}^n = \mathcal{S} \oplus \mathcal{T}$. We call a matrix $\mathbf{P} \in \mathbb{R}^{n \times n}$ a projection matrix or projector into $\mathcal{S}$ along $\mathcal{T}$ if, for any $\mathbf{y} \in \mathbb{R}^n$, $\mathbf{P} \mathbf{y}$ is the projection of $\mathbf{y}$ into $\mathcal{S}$ along $\mathcal{T}$.

BR Corollary 6.5. If $\mathbf{P}$ is a projector into $\mathcal{S}$ along $\mathcal{T}$, then

- $\mathbf{P} \mathbf{x} = \mathbf{x}$ for all $\mathbf{x} \in \mathcal{S}$.

- $\mathbf{P} \mathbf{y} = \mathbf{0}$ for all $\mathbf{y} \in \mathcal{T}$.

Proof: For 1, write $\mathbf{x} = \mathbf{x} + \mathbf{0}$, where $\mathbf{x} \in \mathcal{S}$ and $\mathbf{0} \in \mathcal{T}$. By uniqueness of the decomposition, $\mathbf{P} \mathbf{x}$ must be equal to $\mathbf{x}$. For 2, $\mathbf{y} = \mathbf{0} + \mathbf{y}$, with $\mathbf{0} \in \mathcal{S}$ and $\mathbf{y} \in \mathcal{T}$, is the unique decomposition. Then $\mathbf{P} \mathbf{y}$ must be $\mathbf{0}$.
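One way to build a projector from bases of $\mathcal{S}$ and $\mathcal{T}$ is sketched below. This construction is not stated in the notes above; it is a standard consequence of the unique decomposition. If the columns of $\mathbf{M} = (\mathbf{B}_S : \mathbf{B}_T)$ form a basis of $\mathbb{R}^n$, then $\mathbf{M}^{-1} \mathbf{y}$ gives the coordinates of $\mathbf{y}$, and zeroing the $\mathcal{T}$-coordinates leaves the component in $\mathcal{S}$. The names `BS`, `BT`, `M`, `D` and the specific plane and line are assumptions for illustration.

```julia
using LinearAlgebra

BS = [1.0 0.0; 0.0 1.0; 0.0 0.0]      # basis of S (a plane in R^3)
BT = reshape([1.0, 1.0, 1.0], 3, 1)   # basis of T (a line in R^3)
M = hcat(BS, BT)                      # invertible because R^3 = S ⊕ T

# Keep the S-coordinates, zero out the T-coordinate
D = Diagonal([1.0, 1.0, 0.0])
P = M * D * inv(M)

y = [2.0, 3.0, 4.0]
Py = P * y                            # projection of y into S along T

fixes_S = P * BS ≈ BS                 # P x = x for x in S
kills_T = norm(P * BT) < 1e-12        # P y = 0 for y in T
```

The resulting `P` is not symmetric, so it is a projector in the sense of this lecture but not an orthogonal projector.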

A square matrix $\mathbf{P}$ is said to be idempotent if $\mathbf{P}^2 = \mathbf{P}$.

BR Theorem 6.4. The following statements about a square matrix $\mathbf{P} \in \mathbb{R}^{n \times n}$ are equivalent:

- $\mathbf{P}$ is a projector.

- $\mathbf{P}$ and $\mathbf{I} - \mathbf{P}$ are idempotent. That is, $\mathbf{P}^2 = \mathbf{P}$ and $(\mathbf{I} - \mathbf{P})^2 = \mathbf{I} - \mathbf{P}$.

- $\mathcal{N}(\mathbf{P}) = \mathcal{C}(\mathbf{I} - \mathbf{P})$.

- $\text{rank}(\mathbf{P}) + \text{rank}(\mathbf{I} - \mathbf{P}) = n$.

- $\mathbb{R}^n = \mathcal{C}(\mathbf{P}) \oplus \mathcal{C}(\mathbf{I} - \mathbf{P})$.

We can use any of these five statements as a definition of a projector.

Proof: We show $1 \Rightarrow 2 \Rightarrow 3 \Rightarrow 4 \Rightarrow 5 \Rightarrow 1$.

$1 \Rightarrow 2$: Suppose $\mathbf{P}$ is a projector into $\mathcal{S}$ along $\mathcal{T}$, where $\mathcal{S} \oplus \mathcal{T} = \mathbb{R}^n$. For any $\mathbf{y} \in \mathbb{R}^n$ we have $\mathbf{P} \mathbf{y} \in \mathcal{S}$, so part 1 of the corollary above gives $\mathbf{P}^2 \mathbf{y} = \mathbf{P}(\mathbf{P} \mathbf{y}) = \mathbf{P} \mathbf{y}$. Taking $\mathbf{y} = \mathbf{e}_1, \ldots, \mathbf{e}_n$ shows that each column of $\mathbf{P}^2$ is equal to the corresponding column of $\mathbf{P}$. Therefore $\mathbf{P}^2 = \mathbf{P}$. Finally $(\mathbf{I} - \mathbf{P})^2 = \mathbf{I} - 2\mathbf{P} + \mathbf{P}^2 = \mathbf{I} - \mathbf{P}$.

$2 \Rightarrow 3$: To show $\mathcal{N}(\mathbf{P}) \supseteq \mathcal{C}(\mathbf{I} - \mathbf{P})$, \begin{eqnarray*} & & \mathbf{x} \in \mathcal{C}(\mathbf{I} - \mathbf{P}) \\ &\Rightarrow& \mathbf{x} = (\mathbf{I} - \mathbf{P}) \mathbf{v} \text{ for some } \mathbf{v} \\ &\Rightarrow& \mathbf{P} \mathbf{x} = \mathbf{P} (\mathbf{I} - \mathbf{P}) \mathbf{v} = (\mathbf{P} - \mathbf{P}^2) \mathbf{v} = \mathbf{0}_{n \times n} \mathbf{v} = \mathbf{0} \\ &\Rightarrow& \mathbf{x} \in \mathcal{N}(\mathbf{P}). \end{eqnarray*} To show $\mathcal{N}(\mathbf{P}) \subseteq \mathcal{C}(\mathbf{I} - \mathbf{P})$, \begin{eqnarray*} & & \mathbf{x} \in \mathcal{N}(\mathbf{P}) \\ &\Rightarrow& \mathbf{P} \mathbf{x} = \mathbf{0} \\ &\Rightarrow& \mathbf{x} = \mathbf{x} - \mathbf{P} \mathbf{x} = (\mathbf{I} - \mathbf{P}) \mathbf{x} \\ &\Rightarrow& \mathbf{x} \in \mathcal{C}(\mathbf{I} - \mathbf{P}). \end{eqnarray*}

$3 \Rightarrow 4$: By the Rank-Nullity theorem, $$ \text{rank}(\mathbf{P}) = n - \text{nullity}(\mathbf{P}) = n - \text{dim}(\mathcal{N}(\mathbf{P})) = n - \text{dim}(\mathcal{C}(\mathbf{I} - \mathbf{P})) = n - \text{rank}(\mathbf{I} - \mathbf{P}). $$

$4 \Rightarrow 5$: For any $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{x} = (\mathbf{P} + \mathbf{I} - \mathbf{P}) \mathbf{x} = \mathbf{P} \mathbf{x} + (\mathbf{I} - \mathbf{P}) \mathbf{x}$. Thus $\mathbb{R}^n = \mathcal{C}(\mathbf{P}) + \mathcal{C}(\mathbf{I} - \mathbf{P})$. By 4, $\text{dim}(\mathbb{R}^n) = n = \text{dim}(\mathcal{C}(\mathbf{P})) + \text{dim}(\mathcal{C}(\mathbf{I} - \mathbf{P}))$, so part 2 of BR Theorem 6.2 dictates that the sum of $\mathcal{C}(\mathbf{P})$ and $\mathcal{C}(\mathbf{I} - \mathbf{P})$ must be a direct sum.

$5 \Rightarrow 1$: For any $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{x} = (\mathbf{P} + \mathbf{I} - \mathbf{P}) \mathbf{x} = \mathbf{P} \mathbf{x} + (\mathbf{I} - \mathbf{P}) \mathbf{x}$, where $\mathbf{P} \mathbf{x} \in \mathcal{C}(\mathbf{P})$ and $(\mathbf{I} - \mathbf{P}) \mathbf{x} \in \mathcal{C}(\mathbf{I} - \mathbf{P})$. By 5 this decomposition is unique, so $\mathbf{P} \mathbf{x}$ is the projection of $\mathbf{x}$ into $\mathcal{C}(\mathbf{P})$ along $\mathcal{C}(\mathbf{I} - \mathbf{P})$. That is, $\mathbf{P}$ projects into $\mathcal{C}(\mathbf{P})$ along $\mathcal{C}(\mathbf{I} - \mathbf{P})$.
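The equivalent statements of BR Theorem 6.4 can be checked numerically on a small example. The matrix `P` below is a hypothetical oblique projector in $\mathbb{R}^3$ chosen for illustration, and the `1e-12` tolerance is an arbitrary assumption.

```julia
using LinearAlgebra

P = [1.0 0.0 -1.0; 0.0 1.0 -1.0; 0.0 0.0 0.0]  # a non-symmetric idempotent matrix
n = size(P, 1)
Q = I - P

both_idempotent = P^2 ≈ P && Q^2 ≈ Q            # statement 2
# C(I - P) ⊆ N(P) because P(I - P) = O, and the dimensions agree (statement 3)
null_equals_col = norm(P * Q) < 1e-12 && size(nullspace(P), 2) == rank(Q)
ranks_add_up = rank(P) + rank(Q) == n           # statement 4
spans_Rn = rank(hcat(P, Q)) == n                # C(P) + C(I - P) = R^n (statement 5)

# A non-idempotent matrix fails the rank condition
A = 2.0 * Matrix{Float64}(I, n, n)
bad_rank_sum = rank(A) + rank(I - A)            # 2n rather than n
```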

BR Theorem 6.5. Let $\mathbf{P} \in \mathbb{R}^{n \times n}$ have rank $r$ and $\mathbf{P} = \mathbf{S} \mathbf{U}'$ be a rank factorization of $\mathbf{P}$. Then $\mathbf{P}$ is a projector if and only if $\mathbf{U}' \mathbf{S} = \mathbf{I}_r$.

Proof: Since $\mathbf{P} = \mathbf{S} \mathbf{U}'$ is a rank factorization, we have $\mathbf{S} \in \mathbb{R}^{n \times r}$ and $\mathbf{U}' \in \mathbb{R}^{r \times n}$.

If $\mathbf{U}' \mathbf{S} = \mathbf{I}_r$, then $$ \mathbf{P}^2 = \mathbf{S} \mathbf{U}' \mathbf{S} \mathbf{U}' = \mathbf{S} \mathbf{I}_r \mathbf{U}' = \mathbf{S} \mathbf{U}' = \mathbf{P}. $$

If $\mathbf{P}$ is a projector, then $\mathbf{P}$ is idempotent. That is, $$ \mathbf{S} \mathbf{U}' \mathbf{S} \mathbf{U}' = \mathbf{S} \mathbf{U}'. $$ Since $\mathbf{P} = \mathbf{S} \mathbf{U}'$ is a rank factorization, $\text{rank}(\mathbf{S}) = \text{rank}(\mathbf{P}) = r$. That is, $\mathbf{S}$ has full column rank and its rows span $\mathbb{R}^r$. Thus there exists a matrix $\mathbf{S}_L$ such that $\mathbf{S}_L \mathbf{S} = \mathbf{I}_r$. Similarly $\mathbf{U}'$ having full row rank implies that there exists a matrix $\mathbf{U}'_R$ such that $\mathbf{U}' \mathbf{U}'_R = \mathbf{I}_r$. Pre-multiplying by $\mathbf{S}_L$ and post-multiplying by $\mathbf{U}'_R$ on both sides of the above equation, $$ \mathbf{S}_L \mathbf{S} \mathbf{U}' \mathbf{S} \mathbf{U}' \mathbf{U}'_R = \mathbf{S}_L \mathbf{S} \mathbf{U}' \mathbf{U}'_R, $$ gives the desired identity $\mathbf{U}' \mathbf{S} = \mathbf{I}_r$.

BR Theorem 6.6. Let $\mathbf{P}$ be a projector, or equivalently idempotent. Then $\text{tr}(\mathbf{P}) = \text{rank}(\mathbf{P})$.

Proof: By BR Theorem 6.5, $$ \text{tr}(\mathbf{P}) = \text{tr}(\mathbf{S} \mathbf{U}') = \text{tr}(\mathbf{U}' \mathbf{S}) = \text{tr}(\mathbf{I}_r) = r = \text{rank}(\mathbf{P}). $$
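The rank factorization criterion can be tried on a small case. In the sketch below, `S` and `Ut` (standing in for $\mathbf{U}'$) are hypothetical factors picked for illustration. Rescaling `Ut` still gives a rank factorization of some matrix, but that matrix is no longer a projector.

```julia
using LinearAlgebra

S  = [1.0 0.0; 0.0 1.0; 0.0 0.0]     # n x r with full column rank (n = 3, r = 2)
Ut = [1.0 0.0 -1.0; 0.0 1.0 -1.0]    # plays the role of U' (r x n, full row rank)
P  = S * Ut

I2 = Matrix{Float64}(I, 2, 2)
criterion_holds = Ut * S ≈ I2        # U'S = I_r
is_idempotent = P^2 ≈ P              # hence P is a projector
trace_P, rank_P = tr(P), rank(P)     # trace equals rank for a projector

# Rescale U': A = S (2U') is still a rank factorization, but (2U')S = 2 I_r
A = S * (2 .* Ut)
criterion_fails = !((2 .* Ut) * S ≈ I2)
not_idempotent = !(A^2 ≈ A)
```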

Example: How to construct a $\mathbf{P} \in \mathbb{R}^{n \times n}$ that projects into the line $\text{span}(\{\mathbf{1}_n\})$?

```julia
using LinearAlgebra

n = 5
# this P is symmetric, thus indeed an orthogonal projector
P = (1/n) * ones(n, n)

# idempotent
P^2

tr(P)

rank(P), rank(I - P)

# let's try a non-orthogonal projection: P = c * ones(n) * v'
using Random
Random.seed!(216)
v = randn(n)
c = 1 / sum(v)
P = c * ones(n) * v'

P * P

tr(P)

rank(P), rank(I - P)
```
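A short derivation explains why the scaling $c = 1 / \sum_i v_i$ in the code makes $\mathbf{P} = c \mathbf{1}_n \mathbf{v}'$ idempotent. It assumes $\sum_i v_i \ne 0$, which holds with probability one for a random normal $\mathbf{v}$.

$$ \mathbf{P}^2 = c^2 \mathbf{1}_n (\mathbf{v}' \mathbf{1}_n) \mathbf{v}' = c \left( \sum_{i=1}^n v_i \right) c \mathbf{1}_n \mathbf{v}' = c \left( \sum_{i=1}^n v_i \right) \mathbf{P}. $$

Hence $\mathbf{P}^2 = \mathbf{P}$ exactly when $c \sum_i v_i = 1$. With this choice, $\text{tr}(\mathbf{P}) = c \mathbf{v}' \mathbf{1}_n = 1 = \text{rank}(\mathbf{P})$, in agreement with BR Theorem 6.6. The column space is $\text{span}(\{\mathbf{1}_n\})$ and the null space is $\{\mathbf{x}: \mathbf{v}' \mathbf{x} = 0\}$, so $\mathbf{P}$ projects into the line along that hyperplane. Taking $\mathbf{v} = \mathbf{1}_n$ recovers the symmetric $\mathbf{P} = (1/n) \mathbf{1}_n \mathbf{1}_n'$.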

Cochran theorem

This theorem is important for deriving the F test in linear models.

BR Theorem 6.9. Let $\mathbf{P}_1, \ldots, \mathbf{P}_k$ be $n \times n$ matrices with $\sum_{i=1}^k \mathbf{P}_i = \mathbf{I}_n$. Then the following statements are equivalent:

- $\sum_{i=1}^k \text{rank}(\mathbf{P}_i) = n$.

- $\mathbf{P}_i \mathbf{P}_j = \mathbf{O}$ for $i \ne j$.

- $\mathbf{P}_i^2 = \mathbf{P}_i$ for $i=1,\ldots,k$. That is, each $\mathbf{P}_i$ is a projector.

Proof: We will prove $1 \Rightarrow 2 \Rightarrow 3 \Rightarrow 1$.

Proof of $1 \Rightarrow 2$: Let $r_i = \text{rank}(\mathbf{P}_i)$ and $\mathbf{P}_i = \mathbf{S}_i \mathbf{U}_i'$ be a rank factorization of $\mathbf{P}_i$ for $i=1,\ldots,k$, where $\mathbf{S}_i \in \mathbb{R}^{n \times r_i}$ and $\mathbf{U}_i' \in \mathbb{R}^{r_i \times n}$ with $\text{rank}(\mathbf{S}_i) = \text{rank}(\mathbf{U}_i) = r_i$. Then \begin{eqnarray*} & & \sum_{i=1}^k \mathbf{P}_i = \mathbf{I}_n \\ &\Rightarrow& \mathbf{S}_1 \mathbf{U}_1' + \cdots + \mathbf{S}_k \mathbf{U}_k' = \mathbf{I}_n \\ &\Rightarrow& \begin{pmatrix} \mathbf{S}_1 : \cdots : \mathbf{S}_k \end{pmatrix} \begin{pmatrix} \mathbf{U}_1' \\ \vdots \\ \mathbf{U}'_k \end{pmatrix} = \mathbf{I}_n \\ &\Rightarrow& \mathbf{S} \mathbf{U}' = \mathbf{I}_n, \end{eqnarray*} where $$ \mathbf{S} = \begin{pmatrix} \mathbf{S}_1 : \cdots : \mathbf{S}_k \end{pmatrix}, \quad \mathbf{U}' = \begin{pmatrix} \mathbf{U}_1' \\ \vdots \\ \mathbf{U}'_k \end{pmatrix}. $$ Since $\sum_{i=1}^k r_i = n$, $\mathbf{S}$ and $\mathbf{U}$ must be square and $\mathbf{I}_n = \mathbf{S} \mathbf{U}'$ is a rank factorization. $\mathbf{I}_n$ is clearly idempotent, thus by BR Theorem 6.5 we have $$ \mathbf{U}' \mathbf{S} = \mathbf{I}_n = \begin{pmatrix} \mathbf{I}_{r_1} & \cdots & \mathbf{O} \\ \vdots & \ddots & \vdots \\ \mathbf{O} & \cdots & \mathbf{I}_{r_k} \end{pmatrix}. $$ Therefore $\mathbf{U}_i' \mathbf{S}_j = \mathbf{O}$ whenever $i \ne j$, implying $$ \mathbf{P}_i \mathbf{P}_j = \mathbf{S}_i \mathbf{U}_i' \mathbf{S}_j \mathbf{U}_j' = \mathbf{O} $$ whenever $i \ne j$.

Proof of $2 \Rightarrow 3$: Since $\sum_{i=1}^k \mathbf{P}_i = \mathbf{I}_n$, from 2, $$ \mathbf{P}_j = \mathbf{P}_j \left( \sum_{i=1}^k \mathbf{P}_i \right) = \mathbf{P}_j^2 + \sum_{i \ne j} \mathbf{P}_j \mathbf{P}_i = \mathbf{P}_j^2. $$

Proof of $3 \Rightarrow 1$: The $\mathbf{P}_i$ are idempotent, so $\text{rank}(\mathbf{P}_i) = \text{tr}(\mathbf{P}_i)$ for all $i$ by BR Theorem 6.6. Hence $\sum_{i=1}^k \text{rank}(\mathbf{P}_i) = \sum_{i=1}^k \text{tr}(\mathbf{P}_i) = \text{tr}(\sum_i \mathbf{P}_i) = \text{tr}(\mathbf{I}_n) = n$.
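A small numerical illustration of the three equivalent statements follows. The decomposition below (mean, one contrast, and the remainder, with $n = 4$) is a hypothetical example chosen for illustration, and the `1e-12` tolerance is an arbitrary assumption.

```julia
using LinearAlgebra

n = 4
u = [1.0, -1.0, 0.0, 0.0]
P1 = ones(n, n) / n          # projector onto span{1_n}
P2 = u * u' / 2              # projector onto span{u}; note u'1_n = 0
P3 = I - P1 - P2             # the remainder, so that P1 + P2 + P3 = I
Ps = [P1, P2, P3]

rank_sum = sum(rank.(Ps))    # statement 1: ranks add up to n
products_vanish = all(norm(Ps[i] * Ps[j]) < 1e-12 for i in 1:3, j in 1:3 if i != j)
all_idempotent = all(P^2 ≈ P for P in Ps)

# Matrices that sum to I but are not projectors violate all three statements
A1 = 2.0 * Matrix{Float64}(I, n, n)
A2 = -Matrix{Float64}(I, n, n)
bad_rank_sum = rank(A1) + rank(A2)   # 2n rather than n
```

In the second part, `A1 + A2` is still the identity, yet the ranks sum to $2n$, `A1 * A2` is nonzero, and neither matrix is idempotent.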